Advancing the novel object test: 3D printing and deep learning

To improve standardisation and consistency in the novel object test, Spry et al. designed the 'CapTouch' system: capacitive objects that automatically register interaction. But what about deep learning in EthoVision XT?

Published on

Wed 17 Nov. 2021

Topics

| Cognition | EthoVision XT | Novel Object Test |

Recently, we updated our white paper on novel object recognition. The white paper also addresses the dilemma of which objects to use, and the many inconsistencies and lack of object standardization that exist within and between labs. This is exactly the problem Spry et al. tackled in a recent publication investigating the use of 3D-printed objects [1].

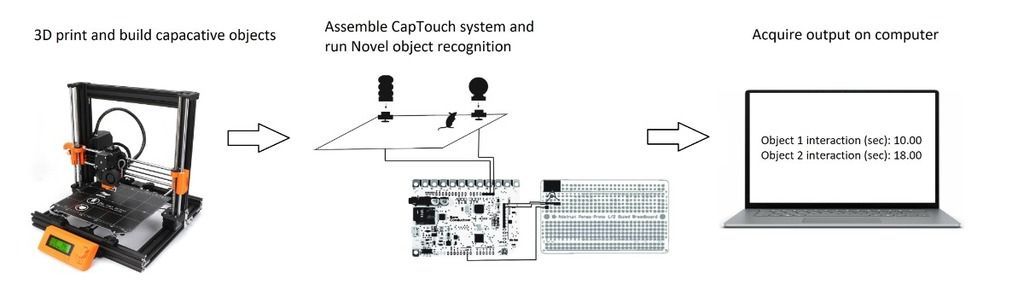

3D-printing capacitive objects

Spry et al. provide a proof-of-principle demonstration that 3D-printed capacitive objects are a cost-effective, reliable, and precise way to perform object recognition tasks when compared with manual scoring. This novel method could ultimately contribute to a more standardized approach to object recognition tasks, which would greatly improve reliability in basic and applied neurobehavioral research.

Quick recap

Object recognition typically involves familiarizing a subject animal with a set of objects and then presenting a novel object or displacing an object to a novel location. Time spent exploring the novel and/or displaced object can be used to infer learning and memory.
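As an illustration of how exploration time can be turned into a single score, the sketch below computes a discrimination index, a measure commonly used in the novel object literature. The formula and the example times are assumptions for illustration; the post itself does not state which measure Spry et al. used.

```python
def discrimination_index(novel_s: float, familiar_s: float) -> float:
    """Return (novel - familiar) / (novel + familiar), a value from -1 to 1.

    Positive values mean more time was spent with the novel object.
    """
    total = novel_s + familiar_s
    if total <= 0:
        raise ValueError("no exploration recorded; the index is undefined")
    return (novel_s - familiar_s) / total


# Hypothetical exploration times (seconds) for one animal.
di = discrimination_index(novel_s=30.0, familiar_s=10.0)
print(f"discrimination index: {di:.2f}")
```

An index near zero suggests no preference, whereas a clearly positive index is typically read as evidence that the animal remembers the familiar object.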

The ‘CapTouch’ system

Two versions of the innovative ‘CapTouch’ system, with an estimated cost of about $85, were implemented: version 1.0 used conductive 3D-printer filament, and version 2.0 used traditional printing filament combined with copper tape. This second version was implemented to provide additional lower-cost objects in more common printing materials (for example PLA and PETG).

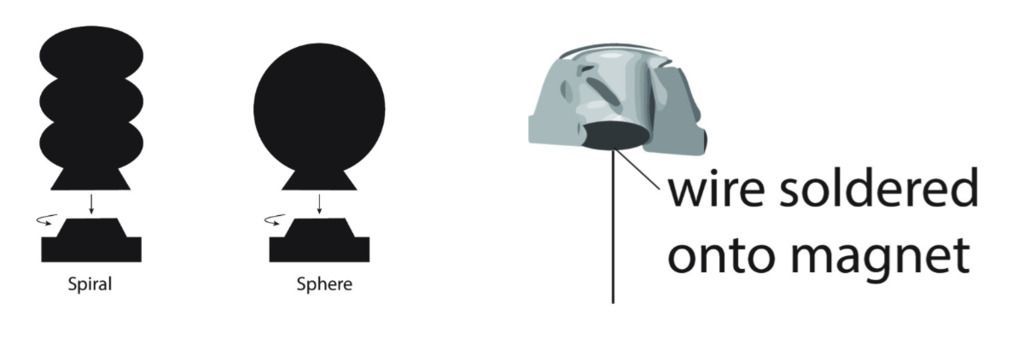

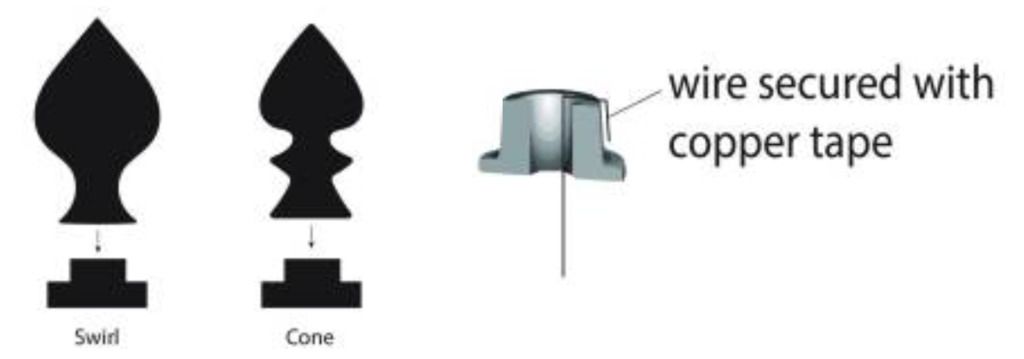

CapTouch 1.0

Objects and their bases for CapTouch 1.0 were printed with conductive filament. Each object was connected to a touch board by solid core wire attached to a magnet inside the object; the wire could then be connected to header pins on the touch board. A twist-off design ensured easy removal and attachment of the object to the base.

CapTouch 2.0

The second iteration of the CapTouch was printed in a non-conductive filament. These objects were hollow, with copper foil tape placed inside to serve as the capacitive sensor, and were attached to the base by a simple peg-in-hole design.

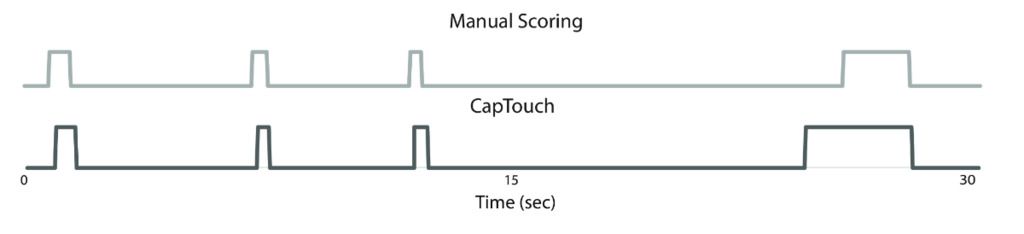

Reliable system

Both the CapTouch 1.0 and 2.0 showed a high positive correlation when compared against manual scoring from multiple scorers, indicating the CapTouch system is a reproducible and viable method regardless of the iteration used.
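To make the comparison concrete, here is a minimal sketch of how agreement between automated and manual scores could be quantified. It assumes Pearson's r and uses made-up interaction times; the post does not say which correlation statistic the authors reported.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length (at least 2 values)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    if sd_x == 0 or sd_y == 0:
        raise ValueError("correlation is undefined for constant data")
    return cov / (sd_x * sd_y)


# Hypothetical interaction times (seconds) per trial, not data from the paper.
manual_s = [12.0, 20.5, 8.0, 15.5, 25.0]
captouch_s = [10.5, 18.0, 7.0, 14.0, 22.5]

r = pearson_r(manual_s, captouch_s)
print(f"Pearson r = {r:.3f}")
```

Note that a high correlation only shows that the two methods rank trials similarly; one method can still report systematically lower times than the other.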

Trained neural networks - EthoVision XT 16

Spry et al. state that current video-based automated algorithms can often be unreliable, whereas manual scoring of object investigation is time-consuming, tedious, and more subjective. Although Spry et al. compare manual scoring with their new capacitive-based system, they do not compare their system with video-based tracking algorithms.

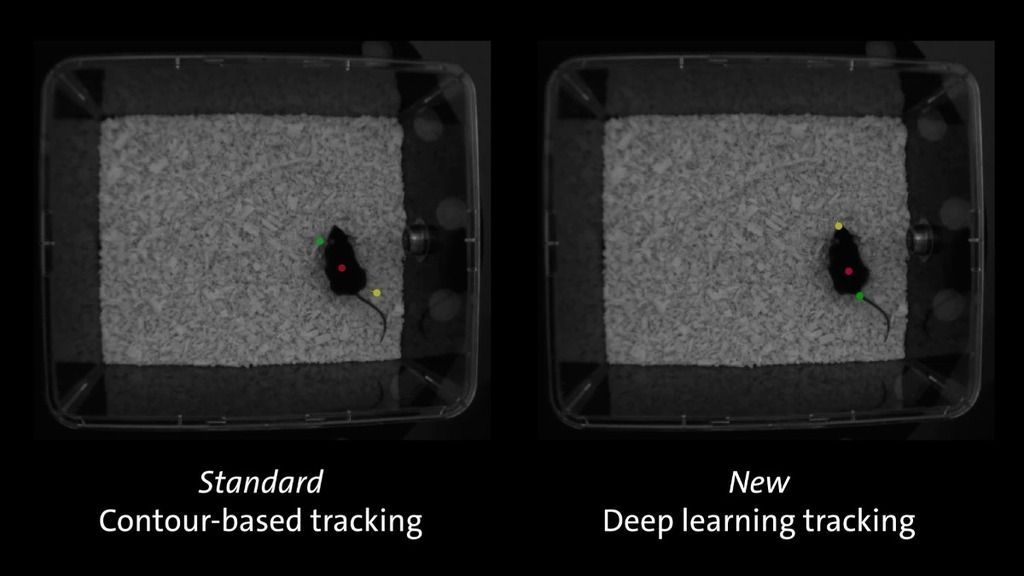

Contour-based video tracking can indeed cause some inconsistencies, especially when subjects are lost during tracking because of objects present in the arena. EthoVision XT 16, however, has introduced deep learning-based tracking, which uses trained neural networks that have drastically improved the detection of body points (nose point, centre, and tail base). Particularly in novel object recognition tests, this has resulted in unsurpassed video tracking quality when detecting object interaction.

A recent webinar by Alicia Brantley, PhD, dives into this topic, and shows how with this new feature, the novel object test can be further standardized and improved. Other key topics of this webinar include:

- Defining novel object test procedures and goals

- Understanding primary challenges to reproducibility in novel object tests

- Successfully utilizing behavioral tracking software to increase reliability in novel object tests

Advancing research

The CapTouch system showed that technological advancements can indeed contribute to improving scientific quality through standardisation. Further improvements to this system are warranted, however, since limitations lie in the filament (printing material) and in the criteria for object exploration. Although the correlation was high, the capacitive objects detected slightly less interaction than manual scoring because they require direct physical contact.
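The effect of the exploration criterion can be sketched with a toy example. The code below counts exploration time from per-frame nose-to-object distances under a contact-only rule and under a proximity rule. The distances, frame rate, and 2 cm threshold are all hypothetical, and this is not how CapTouch or EthoVision XT computes interaction.

```python
def exploration_time(distances_cm, threshold_cm, fps):
    """Seconds during which the nose is within threshold_cm of the object."""
    frames = sum(1 for d in distances_cm if d <= threshold_cm)
    return frames / fps


# Hypothetical nose-to-object distances (cm), one value per video frame.
nose_to_object_cm = [5.0, 3.0, 1.5, 0.0, 0.0, 1.0, 2.5, 4.0]
FPS = 4.0  # assumed frame rate for this toy example

# Contact-only criterion: the nose must touch the object (distance 0).
contact_s = exploration_time(nose_to_object_cm, threshold_cm=0.0, fps=FPS)

# Proximity criterion: the nose within an assumed 2 cm counts as exploration.
proximity_s = exploration_time(nose_to_object_cm, threshold_cm=2.0, fps=FPS)

print(f"contact only: {contact_s} s, proximity: {proximity_s} s")
```

Because every frame that counts as contact also counts as proximity, a contact-only criterion can never report more exploration than a proximity-based one.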

EthoVision's trained neural network can make this discrimination between direct physical contact and exploration without contact. In addition, video tracking in EthoVision XT 16 is basically a turn-key system and does not require constructing capacitive objects, a skill that not every researcher has.

Taken together, research like this paper by Spry et al. is an important step in advancing our understanding of animal models and their basic neurobehavioral mechanisms. Output resolution only increases as technology advances, giving us more insight into these workings.

References

1. Spry, K. P.; Fry, S. A.; DeFillip, J. M. S.; Drye, S. G.; Stevanovic, K. D.; Hunnicutt, J.; Bernstein, B. J.; Thompson, E. E.; Cushman, J. D. (2021). 3D-Printed Capacitive Sensor Objects for Object Recognition Assays. eNeuro, 8 (1), 1–9
