Machine learning and object detection

Humans are incredibly good at recognizing patterns. Now computers can do it as well, and that can be useful. Read more about machine learning and object detection.

Published on Thu 14 Nov. 2019

Topics: Human Factors, UX Research

Imagine two circles next to each other. Then imagine a horizontal line underneath the two circles. You cannot help it: your brain sees a face in that simple pattern. Pattern recognition is something that human minds are incredibly good at, enabling us to find meaning in very sparse information but also to interpret rich and complex patterns. Not only do we recognize something that looks like a face when we see one, we can also tell apart the images of thousands of different faces and remember the names of at least hundreds. Some of us are better at that than others!

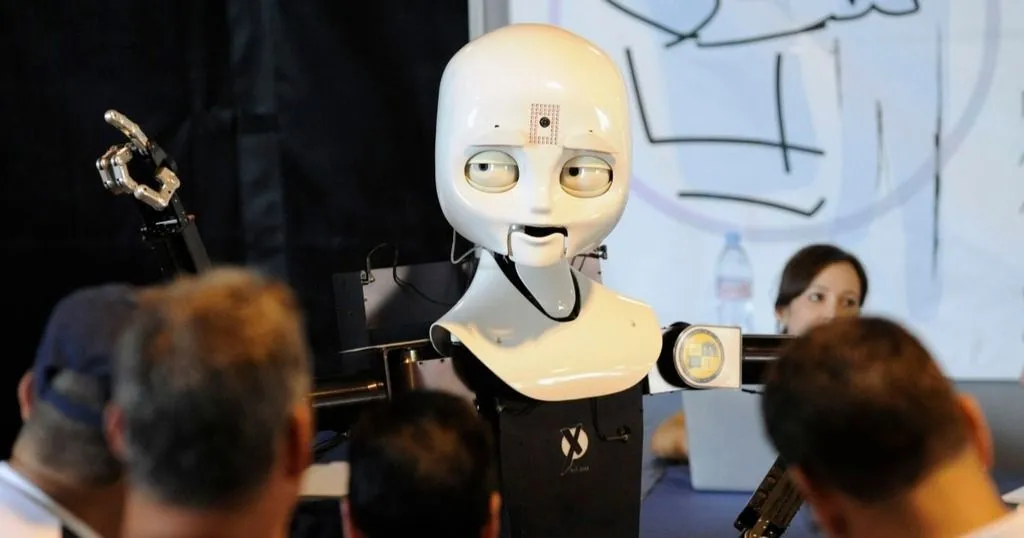

Machine learning and object detection

This is not a uniquely human ability. Other species can also recognize the faces of animals they know, and domesticated animals are even quite good at distinguishing human faces. Computer-vision-based machine learning is all about trying to make software with similar abilities.

There isn't a computer program that can handle anything like the range of different types of images that we can, but there are many AI programs which are getting really good at recognizing specific things. For bird watchers and other naturalists, there are websites where you can upload a photo, and the site will tell you not only what the plant or animal is, but also how confident it is in its guess.

For psychologists there are programs which look at the image of a human face and tell you what emotion is being shown. Noldus' FaceReader is a good example. For farm robots there are programs which look at peppers and determine if they are ripe and then tell the robot how to place its claw to pick them without damaging them.

Automatic object detection

Noldus is very interested in this computer vision technology, not only in emotion recognition but also in a number of other applications. One of them is object detection and recognition in the field of human factors or user experience research. In a complex environment like a plane's cockpit or ship's bridge, there are many different elements like a radar screen or control lever. For the researcher to label those by hand in a video is an awful lot of work.

Where this technology becomes really interesting and useful is when that object detection technology is combined with eye-tracking technology. That is exactly what Noldus has been working on in the ACROSSING project. That means that we can automatically determine not only that a ship's captain is looking towards the right of the bridge, but that he or she is looking at the radar screen.

And we can measure that both when the captain is walking around and when the radar display is switched to a different monitor. The AI-trained computer vision can recognize a radar screen wherever it is in the video image. Furthermore, because this is a specialized application trained with a specific set of images, if there are two different types of radar, say weather and ships, it can learn to distinguish them.


Egocentric vision

With traditional eye tracking, this is only possible with a fixed eye tracker, for instance, underneath a computer monitor. The head doesn't move with respect to the screen, and the system knows what is shown on the screen at a given time, so it can work out that someone who looks at the top right of a certain web page is looking at the company logo. That is not so difficult with a fixed monitor, a person who is sitting still, and screen images that can be captured separately. But as soon as the person gets up and starts walking around, 'top right' of their field of view means something different from one minute to the next.
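To illustrate why the fixed-monitor case is the easy one, here is a minimal sketch of a lookup from a gaze position on the screen to a named screen region. The region names, the pixel coordinates, and the `aoi_at` function are all hypothetical and chosen only for illustration; they do not describe any particular eye tracking product.

```python
from typing import Dict, Optional, Tuple

# Hypothetical regions of a 1920x1080 web page,
# given as (left, top, right, bottom) in screen pixels.
Box = Tuple[float, float, float, float]
SCREEN_AOIS: Dict[str, Box] = {
    "company_logo": (1620, 0, 1920, 120),
    "navigation_menu": (0, 0, 1620, 120),
    "main_content": (0, 120, 1920, 1080),
}


def aoi_at(x: float, y: float, aois: Dict[str, Box] = SCREEN_AOIS) -> Optional[str]:
    """Return the name of the first region containing the gaze point, or None."""
    for name, (left, top, right, bottom) in aois.items():
        if left <= x < right and top <= y < bottom:
            return name
    return None


print(aoi_at(1800, 40))  # a gaze point at the top right of the page
print(aoi_at(900, 600))  # a gaze point in the middle of the page
```

Because the regions never move relative to the screen, a static table like this is enough. With a person walking around, there is no such fixed table, which is the problem the rest of this post addresses.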

Eye tracking glasses still record where someone is looking and also make a video of what they see (this is called 'egocentric vision'), but translating those two things into information about what they are looking at is a labor-intensive task when done by hand. That is where the automatic object detection technology comes into play. It is used to analyze the egocentric video and detect whether there is (for instance) a radar screen in view and where it is in the video frame.

Deep learning

The technology works using deep convolutional neural networks to perform the object detection on each video frame. The network is trained on pre-defined classes of objects such as a generic monitor or sub-classes, for example monitors showing the radar.

At test time, the network marks the objects it has found by drawing bounding boxes around them, which defines the areas of interest automatically, without any effort by the researcher, as shown in the picture at the top of this blog. Most importantly, the network can detect multiple objects in a single video frame. Meanwhile, the eye tracker is still measuring where the person wearing the eye tracking glasses is looking. The coordinates from the eye tracker and the coordinates of the detected objects are then transformed so that they are in the same coordinate space.
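As a rough sketch of what "the same coordinate space" can mean in practice, the example below assumes that the eye tracker reports gaze as normalized coordinates (0 to 1) relative to the scene camera image, and that the detector reports bounding boxes in pixels. Both assumptions, and the names `Detection`, `gaze_to_pixels` and `object_at_gaze`, are illustrative only and are not taken from the ACROSSING software.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Detection:
    """One detected object: a class label, a pixel bounding box, and a confidence score."""
    label: str
    left: float
    top: float
    right: float
    bottom: float
    score: float


def gaze_to_pixels(gaze_x: float, gaze_y: float,
                   frame_width: int, frame_height: int) -> Tuple[float, float]:
    """Convert normalized gaze coordinates (0..1) to pixel coordinates of the video frame."""
    return gaze_x * frame_width, gaze_y * frame_height


def object_at_gaze(gaze_px: Tuple[float, float],
                   detections: List[Detection]) -> Optional[Detection]:
    """Return the detection whose box contains the gaze point, or None.

    If several boxes overlap at the gaze point, the highest-scoring one is returned.
    """
    x, y = gaze_px
    hits = [d for d in detections
            if d.left <= x <= d.right and d.top <= y <= d.bottom]
    return max(hits, key=lambda d: d.score, default=None)


# Hypothetical detections for one 1280x720 frame.
frame_detections = [
    Detection("radar_screen", 100, 50, 500, 400, 0.9),
    Detection("control_lever", 450, 300, 700, 600, 0.8),
]
gaze = gaze_to_pixels(0.25, 0.25, 1280, 720)
hit = object_at_gaze(gaze, frame_detections)
print(hit.label if hit else "no object at gaze point")
```

Choosing the highest-scoring box when boxes overlap is only one possible rule; picking the smallest box, or the one whose center is nearest to the gaze point, would be equally reasonable.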

All those pieces of technology amount to a system that synchronizes a person’s gaze with the objects found in the video and documents the contents of the areas of interest that captured the focus of the user.
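Synchronizing gaze with video also has a timing side: eye trackers typically sample faster than the scene camera records frames. One simple way to pair the two, shown here purely as an assumption about how such a step could look, is to match each gaze sample to the video frame with the nearest timestamp. The function name and the timestamps are hypothetical.

```python
from bisect import bisect_left
from typing import List


def nearest_frame_index(frame_times: List[float], t: float) -> int:
    """Return the index of the frame whose timestamp is closest to t.

    frame_times must be sorted in ascending order and must not be empty.
    """
    if not frame_times:
        raise ValueError("frame_times must not be empty")
    i = bisect_left(frame_times, t)
    if i == 0:
        return 0
    if i == len(frame_times):
        return len(frame_times) - 1
    before, after = frame_times[i - 1], frame_times[i]
    # Pick whichever neighbouring frame is closer in time.
    return i if (after - t) < (t - before) else i - 1


# Hypothetical 25 fps video timestamps (seconds) and a few gaze sample times.
frame_times = [0.00, 0.04, 0.08, 0.12]
for gaze_time in (0.01, 0.05, 0.07, 0.30):
    print(gaze_time, "-> frame", nearest_frame_index(frame_times, gaze_time))
```

Once each gaze sample is paired with a frame, the detections for that frame can be checked against the gaze position.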

Valuable for designers

This is not trivial: our colleague who developed this wrote over a million lines of code in his work in the ACROSSING project. Was it worth it? Actually, this is very valuable information. It lets researchers answer questions such as how often a ship's captain looks out of the window (or is he or she too absorbed in watching the instruments?), how systematically the bridge staff scan all the different sources of information, and whether they noticed a warning lamp. That knowledge is critical both in designing safe and usable systems and in training new crew.
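Questions like "how often does the captain look out of the window?" become simple counting once every gaze sample carries an object label. The sketch below assumes a list with one label per gaze sample (or `None` when no object was hit) and a fixed sample interval; the labels, the interval, and the `summarize_gaze` function are made up for illustration.

```python
from collections import defaultdict
from itertools import groupby
from typing import Dict, Optional, Sequence, Tuple


def summarize_gaze(labels: Sequence[Optional[str]],
                   sample_interval_s: float) -> Tuple[Dict[str, float], Dict[str, int]]:
    """Return (total dwell time in seconds, number of separate looks) per object label.

    A 'look' is an unbroken run of consecutive samples on the same object.
    Samples labelled None (no object at the gaze point) are ignored.
    """
    dwell: Dict[str, float] = defaultdict(float)
    looks: Dict[str, int] = defaultdict(int)
    for label, run in groupby(labels):
        if label is None:
            continue
        run_length = sum(1 for _ in run)
        dwell[label] += run_length * sample_interval_s
        looks[label] += 1
    return dict(dwell), dict(looks)


# Hypothetical labels for seven consecutive gaze samples taken every 0.5 s.
samples = ["radar", "radar", "window", None, "window", "window", "radar"]
dwell_time, look_count = summarize_gaze(samples, 0.5)
print(dwell_time)
print(look_count)
```

Real analyses would usually also filter out very short runs and blinks, which this sketch leaves out.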

Acknowledgments

The work described here was carried out by Georgios Kapidis in the ACROSSING project, which was funded by the Horizon 2020 program of the European Union as a Marie Skłodowska-Curie Innovative Training Network, project number 676157. The specific work on the ship's bridge simulator was carried out in cooperation with MARIN.

References

The following papers give more details about the technology developed:

G. Kapidis, R. Poppe, E. van Dam, L. P. J. J. Noldus, and R. C. Veltkamp, “Multitask Learning to Improve Egocentric Action Recognition,” in IEEE International Conference on Computer Vision (ICCV) Workshops, 2019.

G. Kapidis, E. van Dam, R. Poppe, L. P. J. J. Noldus, and R. C. Veltkamp, “Action Detection from Egocentric Videos in Daily Living Scenarios,” in Measuring Behavior 2018, Manchester, UK, 2018, pp. 405–407.

G. Kapidis, R. Poppe, E. van Dam, L. P. J. J. Noldus, and R. C. Veltkamp, “Object Detection-Based Location and Activity Classification from Egocentric Videos: A Systematic Analysis,” in Smart Assisted Living, Springer, 2019.
