Validation study: Basic emotions and Action Unit detection

Guest blogger Jan Zumhasch, a certified FACS-coder, explains why FaceReader is amazing if you want to analyze facial expressions and emotions.

Published on Wed 24 Oct. 2018

Topics: Action Units, FaceReader, Facial Expression Recognition Software, FACS

This is a guest blog post by Jan Zumhasch. He studied at the Technical University Dresden in Germany and graduated with a master's degree in communication science.

Starting point

According to Ekman (1970), six culture-independent basic emotions can be distinguished: joy, anger, disgust, sadness, surprise and fear. Contempt was later added as a seventh (Ekman & Heider, 1988). Each of these emotions corresponds to a specific facial expression and can therefore be observed.

The most empirically grounded observation system for identifying these emotions is the Facial Action Coding System (FACS), developed by Ekman and colleagues (1978), repeatedly reviewed, revised in 1992 and 2002 (Ekman, Friesen & Hager, 2002), and used by numerous researchers. FACS was also used to develop FaceReader, a software tool that analyzes these facial expressions and emotions automatically. But how well does the software do its job?

Lewinski et al. (2014) published a validation study of the performance of version 6. Since then, the software has been revised and expanded. As a certified FACS coder myself, I was very interested in testing a newer version.

Testing the facial expression recognition software tool

In my master's thesis I validated version 7.1 of FaceReader, including the Action Unit Module. For this part of my research I used selected study materials: photos and videos from the Amsterdam Dynamic Facial Expression Set (ADFES; Van der Schalk et al.) and material taken from the Facial Action Coding System (FACS), the holy grail for learning about the face.

Results

In total, I manually coded 176 photos and 143 videos. This gave me the Action Unit combinations and the corresponding emotions for every single photo and video, which I could then compare with FaceReader's results.

A. High performance with high-quality photos and videos

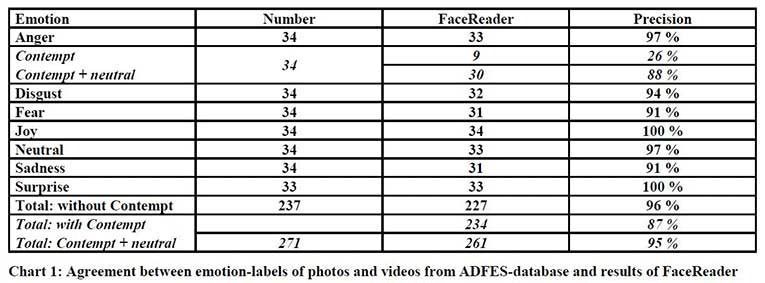

FaceReader recognized the photos and videos in the ADFES dataset with high precision for the six basic emotions (joy, sadness, anger, surprise, fear and disgust) as well as for the neutral facial expression.

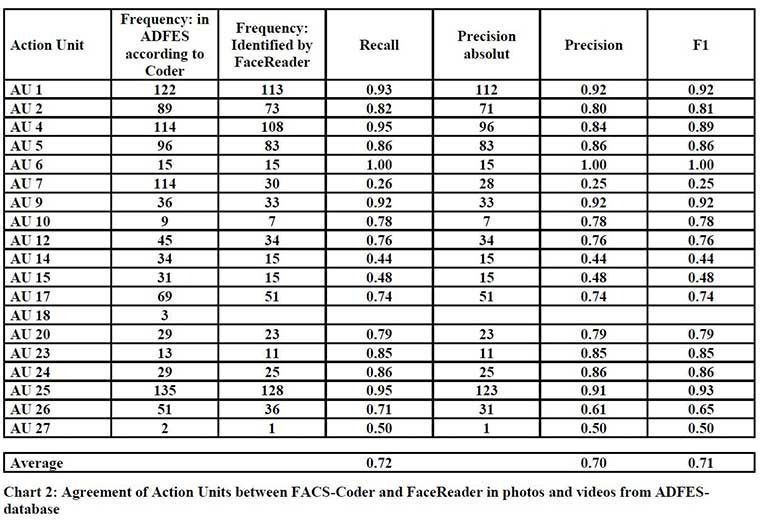

The recall of the correct Action Units was also very high. Exact definitions of "Recall", "Precision" and "F1" can be found in the validation study by Lewinski et al. (2014). For example, the recall for AU 1 in the chart above means that in 113 of the 122 cases in which AU 1 was present according to my manual coding, FaceReader identified it correctly. This equals 113 / 122 ≈ 0.93, or 93%, an amazing result.
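To make the three metrics concrete, here is a minimal Python sketch of how they can be computed for one Action Unit. It assumes that the manual FACS coding is treated as ground truth and that each photo's coding is stored as a set of AU numbers keyed by a photo ID; this data layout and the function name `au_metrics` are hypothetical and do not reflect FaceReader's export format.

```python
from typing import Dict, Set


def au_metrics(manual: Dict[str, Set[int]], software: Dict[str, Set[int]], au: int) -> Dict[str, float]:
    """Precision, recall and F1 for a single Action Unit.

    `manual` is treated as ground truth (the human FACS coder); `software`
    holds the AUs the tool reported for the same photo IDs. Only manually
    coded items are scored.
    """
    tp = fp = fn = 0
    for item, truth in manual.items():
        predicted = software.get(item, set())
        in_truth, in_pred = au in truth, au in predicted
        if in_truth and in_pred:
            tp += 1  # AU coded by the human and detected by the software
        elif in_pred:
            fp += 1  # AU reported by the software but not coded by the human
        elif in_truth:
            fn += 1  # AU coded by the human but missed by the software
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


# The AU 1 example from the text: detected in 113 of the 122 cases where it was present.
print(round(113 / 122, 2))  # 0.93

# Toy data (invented for illustration): one hit, one miss and one false alarm for AU 1.
manual = {"p1": {1, 2}, "p2": {1, 4}, "p3": {12}}
software = {"p1": {1, 2}, "p2": {4}, "p3": {1, 12}}
print(au_metrics(manual, software, au=1))  # {'precision': 0.5, 'recall': 0.5, 'f1': 0.5}
```

Recall divides by the cases where the AU was really present, while precision divides by the cases where the software reported it, which is why both are needed to judge a detector.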

Overall, all results lie above the findings of Lewinski et al. (2014), both for the recall of emotions and for the recall of Action Units. In addition, the accuracy values for the six basic emotions roughly match the specifications in the software manual. The only exception is contempt, which was identified less accurately. Note, however, that contempt detection in FaceReader is still experimental.

In addition, FaceReader labeled the contempt expressions as neutral because the intensity bar for neutral was much higher than the one for contempt. This may be because contempt is quite a subtle expression, and FaceReader has problems with facial expressions of very low intensity (see below).
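FaceReader's exact classification rule is not described in this post. Under the simplifying assumption that the displayed label is simply the category with the highest intensity, the following Python sketch shows how a weak contempt signal ends up labeled as neutral. The function name `dominant_label` and the intensity values are hypothetical, not measured data.

```python
from typing import Dict


def dominant_label(intensities: Dict[str, float]) -> str:
    """Return the category with the highest intensity (simplified assumption)."""
    return max(intensities, key=intensities.get)


# Hypothetical intensities for a subtle contempt expression.
frame = {"neutral": 0.82, "contempt": 0.21, "happy": 0.03}
print(dominant_label(frame))  # neutral
```

With a rule like this, contempt is only reported once its intensity exceeds that of neutral, which matches the behavior described above for subtle expressions.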

B. Specific difficulties with low image quality

The analysis of selected FACS training material shows a more nuanced picture. On the one hand, FaceReader on average identified the observable emotions in the videos precisely; in the end, only three of them were not detected with sufficient precision. The performance values and the agreement index calculated in my thesis indicate a relatively high reliability of the software. On the other hand, FaceReader recognized the basic emotions in the FACS videos less accurately than those in the ADFES material.

However, it must be taken into account that the selected FACS material contains basic emotions only in part, with weakly pronounced muscle activity and low intensities. This is why FaceReader often gave the neutral facial expression a higher priority than a basic emotion when coding. In this respect, FaceReader failed this toughness test.

Although FaceReader was excellent at identifying the correct Action Unit combination for a given facial expression, surprisingly it sometimes still could not identify the corresponding basic emotion. This could indicate that FaceReader treats the analysis of Action Units and the analysis of emotions as two separate tasks: in reality these Action Unit combinations indicate an emotion, but FaceReader apparently cannot always make that connection.
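To illustrate the connection that FaceReader sometimes misses, here is a small Python sketch that maps a detected Action Unit combination to a basic emotion. It is not FaceReader's internal logic, which this post does not describe. The prototype sets are simplified versions of commonly cited EMFACS-style prototypes (intensity letters and left/right asymmetry are ignored), and the names `PROTOTYPES` and `emotion_from_aus` are hypothetical.

```python
from typing import Optional, Set

# Simplified, illustrative AU prototypes (assumption, not FaceReader's rules).
# Intensity letters (e.g. 5B) and unilateral AUs are ignored.
PROTOTYPES = {
    "happiness": {6, 12},
    "sadness": {1, 4, 15},
    "surprise": {1, 2, 5, 26},
    "fear": {1, 2, 4, 5, 7, 20, 26},
    "anger": {4, 5, 7, 23},
}


def emotion_from_aus(aus: Set[int]) -> Optional[str]:
    """Return the most specific prototype fully contained in `aus`, or None."""
    matches = [name for name, proto in PROTOTYPES.items() if proto <= aus]
    if not matches:
        return None
    # Fear contains the surprise prototype, so prefer the larger (more specific) match.
    return max(matches, key=lambda name: len(PROTOTYPES[name]))


print(emotion_from_aus({6, 12, 25}))               # happiness
print(emotion_from_aus({1, 2, 4, 5, 7, 20, 26}))   # fear
print(emotion_from_aus({4, 7}))                    # None
```

Preferring the largest matching prototype is one simple way to resolve overlaps such as surprise and fear; a real system would also need to weigh AU intensities.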

It therefore remains to be clarified why FaceReader makes mistakes when analyzing certain high-quality photos, sometimes even when it identifies the Action Unit combination correctly but not the matching emotion, and why it is sometimes unable to analyze a photo of outstanding quality at all. One idea for making the software more flexible would be to support profile pictures, so that emotions and Action Units could be analyzed even when the face is only visible from the side.

Higher precision and performance ability

Overall, this addition to the FaceReader family (version 7.1) shows that the software has made progress over the years and continues to do so. The validation study shows higher precision and performance. As long as photo and video quality are high (as stated in the FaceReader handbook), the camera faces the front of the face straight on and the lighting is right, the results will be outstanding.

The most surprising and most relevant issues with FaceReader are listed below:

- FaceReader is really good at detecting the correct emotion, but in many cases the intensity value is not high enough for the expression to be labeled as that emotion. Instead, the neutral facial state is displayed.

- FaceReader sometimes produces different results for identical photos. This applies to emotions as well as to Action Unit combinations, and it is one of the more striking results of the validation. For Action Unit combinations, even the intensities seem to differ from time to time for the exact same photo.

- Sometimes FaceReader cannot analyze a seemingly "perfect" photo that meets all the conditions for analysis listed in the handbook.
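Inconsistent results for identical photos can be quantified by analyzing the same photo several times and comparing the outputs. The Python sketch below assumes a hypothetical layout in which the labels from repeated runs are collected per photo ID, and the intensities of one Action Unit across runs are collected in a list; the function names and values are illustrative only.

```python
from typing import Dict, List


def inconsistent_items(runs: Dict[str, List[str]]) -> List[str]:
    """Return IDs of photos whose repeated analyses did not all give the same label."""
    return sorted(photo for photo, labels in runs.items() if len(set(labels)) > 1)


def intensity_spread(values: List[float]) -> float:
    """Range (max - min) of one AU's intensity across repeated runs of the same photo."""
    return max(values) - min(values)


# Hypothetical repeated runs (not data from the thesis).
runs = {
    "photo_01": ["happy", "happy", "happy"],
    "photo_02": ["neutral", "sad", "neutral"],
}
print(inconsistent_items(runs))                      # ['photo_02']
print(round(intensity_spread([0.41, 0.47, 0.39]), 2))  # 0.08
```

For a deterministic analysis of an identical input, both checks should come out empty and zero, so any non-zero result points to the kind of instability described above.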

Still, the fact remains that FaceReader is amazing if you want to analyze facial expressions and emotions, and the software has a bright future given the steady progress of digitalization and companies' growing interest in knowing the emotional state of their target groups.

References

- Ekman, P. (1970). Universal Facial Expressions of Emotions. California Mental Health Research Digest, 8(4), 151-158.

- Ekman, P., & Heider, K. G. (1988). The Universality of a Contempt Expression: A Replication. Motivation and Emotion, 12(3), 303-308.

- Ekman, P., Friesen, W. V., & Hager, J. C. (2002). Facial Action Coding System. Salt Lake City: Research Nexus, a subsidiary of Network Information Research Corporation.

- Lewinski, P., Fransen, M. L., & Tan, E. S. H. (2014). Predicting Advertising Effectiveness by Facial Expressions in Response to Amusing Persuasive Stimuli. Journal of Neuroscience, Psychology, and Economics, 7, 1-14.

- Van der Schalk, J., Hawk, S. T., Fischer, A. H., & Doosje, B. J. (in press). Moving faces, looking places: The Amsterdam Dynamic Facial Expressions Set (ADFES). Emotion.
